Preparing for Architecture Context

How I turned infrastructure relationships into a graph that AI agents can actually query - and found a 'fixed' bug still running in production.

This is a short builder diary from my startup journey: a bunch of customer conversations about "architecture context" (and the usual incident-review pain) turned into an experiment I couldn't stop thinking about.

The cloud isn't a list of resources. It's a web of relationships.

Kubernetes isn't "a cluster." It's code → image → workload → pod → node → exposure → identity.

Once those relationships live in a graph, your assistants/agents can stop guessing and start answering.

The model (one sentence)

Layer 1: baseline graph (Cartography + simple queries)

This is the "out of the box" win: Cartography syncs cloud resources into Neo4j so you can query relationships, not inventories.

What the baseline graph gives you:

(EC2Instance) ──MEMBER_OF_EC2_SECURITY_GROUP──> (EC2SecurityGroup)

(EC2SecurityGroup) ──INGRESS──> (EC2SecurityGroupRule {cidr: "0.0.0.0/0", port: 443})

(EC2Instance|AWSUser|AWSRole) ──STS_ASSUMEROLE_ALLOW──> (AWSRole)

MCP tools you can build on Layer 1:

// "what's risky right now?"

reality_snapshot(region?: string)

// "is this reachable from the internet?"

reachability_explain(target_type: string, target_id: string)

// narrow permission checks

principal_can(principal_type, principal_id, action, resource_type?, resource_id?)

// diff STS_ASSUMEROLE_ALLOW chains between two selections

new_privilege_paths(...)

// power-user escape hatch

execute_cypher_readonly(cypher: string)

Layer 2: heavy lifting (Kubernetes + "which code runs where?")

Layer 1 answers "what's exposed?" and "what can assume what?" for the cloud graph.
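To make the "what's exposed?" question concrete, here is a minimal sketch of what a `reachability_explain`-style tool might do. It walks a tiny in-memory stand-in for the graph rather than Neo4j, and the node IDs and sample data are made up. The relationship names follow the diagram in this post, which may not match Cartography's actual schema. It also only checks for a world-open ingress rule; real reachability would need public IPs, routing, and NACLs as well.

```typescript
// Hypothetical in-memory stand-in for the Neo4j graph. Relationship names
// follow the diagram in this post, not necessarily Cartography's real schema.
type Edge = { from: string; rel: string; to: string };
type IngressRule = { cidr: string; port: number };

const edges: Edge[] = [
  { from: "i-web", rel: "MEMBER_OF_EC2_SECURITY_GROUP", to: "sg-web" },
  { from: "sg-web", rel: "INGRESS", to: "rule-https" },
  { from: "i-db", rel: "MEMBER_OF_EC2_SECURITY_GROUP", to: "sg-db" },
  { from: "sg-db", rel: "INGRESS", to: "rule-internal" },
];

const rules: Record<string, IngressRule> = {
  "rule-https": { cidr: "0.0.0.0/0", port: 443 },
  "rule-internal": { cidr: "10.0.0.0/16", port: 5432 },
};

// Follow one relationship type outward from a node.
function neighbors(from: string, rel: string): string[] {
  return edges.filter((e) => e.from === from && e.rel === rel).map((e) => e.to);
}

// Return one evidence line per world-open ingress rule attached to the instance.
function reachabilityExplain(instanceId: string): string[] {
  const evidence: string[] = [];
  for (const sg of neighbors(instanceId, "MEMBER_OF_EC2_SECURITY_GROUP")) {
    for (const ruleId of neighbors(sg, "INGRESS")) {
      const rule = rules[ruleId];
      if (rule && rule.cidr === "0.0.0.0/0") {
        evidence.push(`${instanceId} -> ${sg} -> ${rule.cidr}:${rule.port}`);
      }
    }
  }
  return evidence;
}
```

Returning the path as evidence, rather than a bare yes/no, is the point: the agent can show why it thinks something is exposed.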

Layer 2 answers the question teams actually live in: Where is this code running, what version is live, and what can it reach?

How I built this

- A CI/CD integration that runs on every build and captures commit → image digest → registry links as artifacts flow through the pipeline

- A cronjob that periodically syncs live cluster state: workloads, pods, services, RBAC bindings, and network policies
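As a rough illustration of the CI/CD half, the sketch below turns one build record into graph edges. The `BuildRecord` shape and the `provenanceEdges` helper are hypothetical, not the actual integration. The relationship names (`AT_COMMIT`, `BUILT_IN`, `PRODUCED`) are the ones used in the code → runtime graph in this post.

```typescript
// Hypothetical record a CI step might emit after pushing an image.
type BuildRecord = {
  repo: string;        // repository identifier, e.g. "acme/api"
  commit: string;      // git commit SHA
  ciRunId: string;     // the CI system's run identifier
  imageDigest: string; // "sha256:<64 hex chars>" reported by the registry push
};

type Edge = { from: string; rel: string; to: string };

// Build the commit -> CI run -> image digest edges for one build.
function provenanceEdges(b: BuildRecord): Edge[] {
  // Insist on an immutable digest; a mutable tag like "latest" cannot anchor provenance.
  if (!/^sha256:[0-9a-f]{64}$/.test(b.imageDigest)) {
    throw new Error(`expected an image digest, got "${b.imageDigest}"`);
  }
  return [
    { from: `Repo:${b.repo}`, rel: "AT_COMMIT", to: `Commit:${b.commit}` },
    { from: `Commit:${b.commit}`, rel: "BUILT_IN", to: `CIRun:${b.ciRunId}` },
    { from: `CIRun:${b.ciRunId}`, rel: "PRODUCED", to: `ImageDigest:${b.imageDigest}` },
  ];
}
```

Keying on the digest rather than the tag is what lets the cluster sync later match a running pod's image back to a specific commit.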

What to ingest (minimal set with maximum payoff):

- Workloads: Deployments/StatefulSets/DaemonSets/CronJobs, pod templates, labels/annotations

- Placement: Pods → Nodes → Cluster (and Node → cloud instance if you want)

- Exposure: Services, Ingress/Gateway, LoadBalancers, ports, hostnames

- Identity: ServiceAccounts + RBAC bindings (+ cloud identity links like IRSA/workload identity)

- Network intent: NetworkPolicies (or "none exist")

- Supply chain: image digests, provenance (CI run → commit), SBOM/CVE summaries

- Ownership: owner, service, tier, oncall (whatever you can standardize)
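One detail from the list above that is easy to miss is recording "none exist" explicitly, so that a missing NetworkPolicy becomes a fact in the graph instead of an absence the agent has to infer. A minimal sketch, with hypothetical type and function names:

```typescript
// Hypothetical shapes for what the cluster sync might collect.
type NetworkPolicy = { namespace: string; name: string };
type NetworkIntent = { namespace: string; policies: string[]; hasNone: boolean };

// Emit one record per namespace, including namespaces with no policy at all.
function networkIntent(namespaces: string[], policies: NetworkPolicy[]): NetworkIntent[] {
  return namespaces.map((ns) => {
    const names = policies.filter((p) => p.namespace === ns).map((p) => p.name);
    return { namespace: ns, policies: names, hasNone: names.length === 0 };
  });
}
```

For example, `networkIntent(["prod", "dev"], [{ namespace: "prod", name: "default-deny" }])` marks `dev` with `hasNone: true`.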

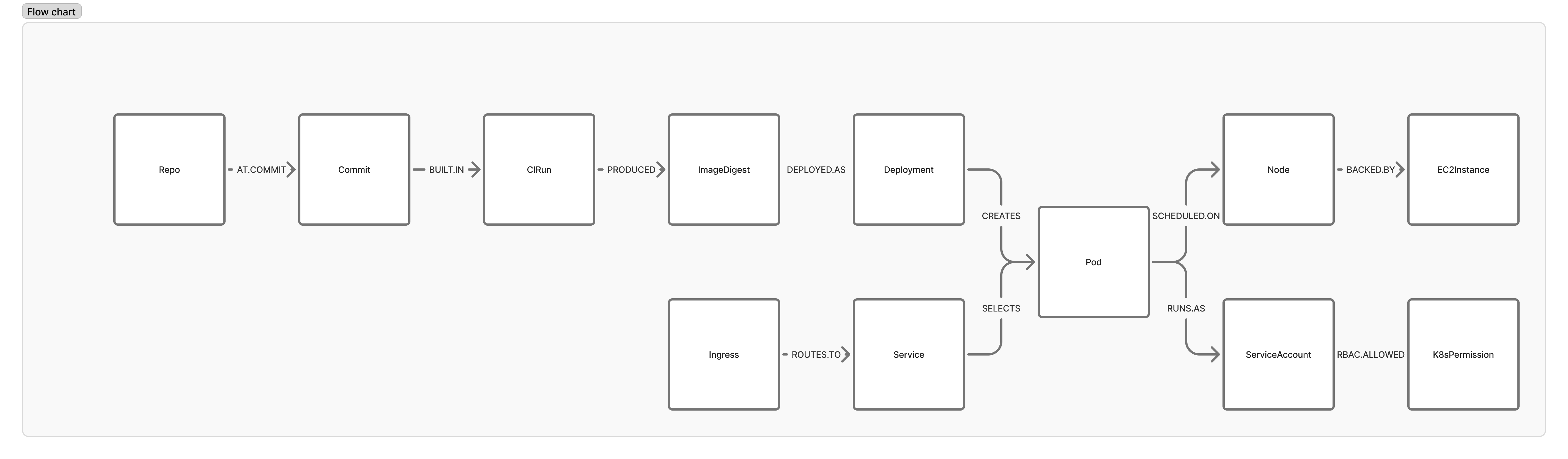

The "code → runtime" graph (conceptually):

// Source code to container image

(Repo)-[:AT_COMMIT]->(Commit)-[:BUILT_IN]->(CIRun)

(CIRun)-[:PRODUCED]->(ImageDigest)

// Image to running workload

(ImageDigest)-[:DEPLOYED_AS]->(Deployment)

(Deployment)-[:CREATES]->(Pod)-[:SCHEDULED_ON]->(Node)-[:BACKED_BY]->(EC2Instance)

// Traffic routing

(Ingress)-[:ROUTES_TO]->(Service)-[:SELECTS]->(Pod)

// Identity and permissions

(Pod)-[:RUNS_AS]->(ServiceAccount)-[:RBAC_ALLOWED]->(K8sPermission)

MCP tools that become possible in Layer 2:

// commit → image digest → workloads → namespaces → clusters

where_is_this_code_running(repo: string, ref: string)

// "prod is running image sha256:… built from commit …"

what_version_is_live(service: string, env: string)

// Ingress/Gateway/LB inventory + what they route to

public_entrypoints(env?: string)

// routing chain + evidence

explain_service_exposure(service: string)

// reachable services/namespaces + identity/RBAC context

k8s_blast_radius(workload: string)

// RBAC paths to pods/exec and similar "uh oh" verbs

who_can_exec(namespace: string)

// images without provenance/SBOM, high CVEs, drift from allowed registries

supply_chain_risks(env?: string)

The wow moment: "Where is this code running?"

The moment it clicked

I asked Claude Code: "Where is this code running?" (and waited for it to hallucinate).

Instead, it walked the graph:

- repo → CI provenance → ECR repo + image digest

- image digest → workloads/pods pulling it

- pods → exact node/host

- workload → egress/gateway path (how traffic leaves), with evidence you can paste into a ticket
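The walk above is essentially a fixed-order traversal. Here is a minimal sketch of it over an in-memory edge list, assuming the relationship names from the code → runtime graph earlier in this post. The sample nodes, the `walk` helper, and the choice to treat `ref` as a commit SHA are illustrative assumptions; the real tool would run the equivalent query against the graph database.

```typescript
type Edge = { from: string; rel: string; to: string };

// Hypothetical sample data using the post's relationship names.
const edges: Edge[] = [
  { from: "Repo:acme/api", rel: "AT_COMMIT", to: "Commit:abc123" },
  { from: "Commit:abc123", rel: "BUILT_IN", to: "CIRun:42" },
  { from: "CIRun:42", rel: "PRODUCED", to: "ImageDigest:sha256:aaa" },
  { from: "ImageDigest:sha256:aaa", rel: "DEPLOYED_AS", to: "Deployment:prod/api" },
  { from: "Deployment:prod/api", rel: "CREATES", to: "Pod:prod/api-7d9f" },
  { from: "Pod:prod/api-7d9f", rel: "SCHEDULED_ON", to: "Node:ip-10-0-1-12" },
];

const chain = ["AT_COMMIT", "BUILT_IN", "PRODUCED", "DEPLOYED_AS", "CREATES", "SCHEDULED_ON"];

// Follow the relationship types in order, keeping every complete path.
function walk(start: string, rels: string[]): string[][] {
  let paths: string[][] = [[start]];
  for (const rel of rels) {
    const next: string[][] = [];
    for (const path of paths) {
      const tail = path[path.length - 1];
      for (const e of edges) {
        if (e.from === tail && e.rel === rel) next.push([...path, e.to]);
      }
    }
    paths = next; // paths that cannot take this hop are dropped
  }
  return paths;
}

// Return each repo -> ... -> node path for the given commit as a pasteable evidence line.
function whereIsThisCodeRunning(repo: string, commit: string): string[] {
  return walk(`Repo:${repo}`, chain)
    .filter((p) => p[1] === `Commit:${commit}`)
    .map((p) => p.join(" -> "));
}
```

Because each result is a full path rather than just the final node, the answer carries its own evidence, which is what makes it usable in a ticket.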

Real-world win

Want early access?

I've built a first beta and I'm opening it up to a small group of people for early feedback.

Join the beta